This article is also published on Medium:

https://medium.com/@chandramanohar/evolution-of-application-integration-and-api-first-approach-15b35f4f305f

Evolution of Application Integration

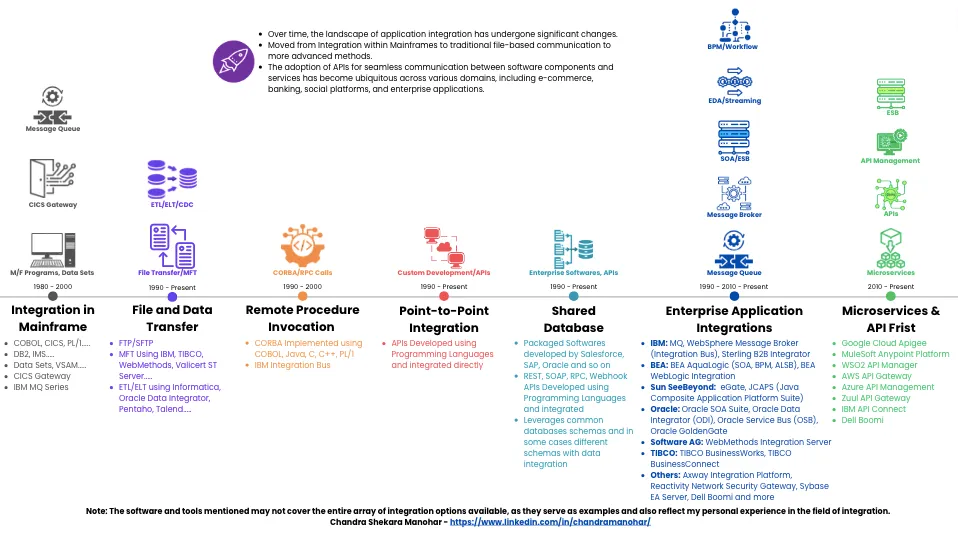

HOW APPLICATION INTEGRATION HAS EVOLVED:

1. Integrations within Mainframe Systems

The initial approach to application integration within Mainframe systems involved writing programs in COBOL, CICS, PL/1 and so on, which access Mainframe databases (DB2, IMS and more) or file systems (VSAM, Data Sets and more) and are controlled through Job Control Language (JCL), database triggers and so on. Later, CICS Gateway was used to expose CICS programs as services, and IBM MQSeries came into the picture for asynchronous and synchronous communication across systems.

2. Data and File Transfer:

The initial approach to application integration involved file-based communication through protocols like FTP/SFTP, or employed techniques like ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) for large data transfers. File transfer leverages Managed File Transfer (MFT) software to connect and handle file processing using pre-built or custom connectors, which in turn leverage APIs. ETL/ELT software, on the other hand, extracts data from databases or file systems into a staging area, transforms it to the target structure in the staging area, and then loads it into a data warehouse for reporting and analytics. Additionally, ETL/ELT tools have expanded their capabilities to include data extraction from APIs and webhook integration. This approach remains relevant and is commonly used for:

- High-volume, high-speed file transfers

- Building versatile workflows regardless of underlying technology

- ETL/ELT processes for data warehousing and database replication

- Change Data Capture (CDC)

- Batch processing and more.
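The extract, stage, transform and load sequence described above can be sketched in a few lines. This is only an illustrative sketch, not how any particular ETL product works: the table names, the sample rows and the `run_etl` function are all hypothetical, and an in-memory SQLite database stands in for both the staging area and the data warehouse.

```python
import sqlite3

# Hypothetical source rows, standing in for an extract from a database or file.
SOURCE_ROWS = [
    ("1001", "alice", "120.50"),
    ("1002", "bob", "80.00"),
    ("1003", "alice", "30.25"),
]


def run_etl(rows):
    conn = sqlite3.connect(":memory:")
    # Extract: land the raw records in a staging table unchanged.
    conn.execute(
        "CREATE TABLE staging_orders (order_id TEXT, customer TEXT, amount TEXT)"
    )
    conn.executemany("INSERT INTO staging_orders VALUES (?, ?, ?)", rows)
    # Transform: cast types and aggregate to the target structure, still in staging.
    conn.execute(
        "CREATE TABLE staging_totals AS "
        "SELECT UPPER(customer) AS customer, SUM(CAST(amount AS REAL)) AS total "
        "FROM staging_orders GROUP BY customer"
    )
    # Load: copy the transformed rows into the table used for reporting.
    conn.execute(
        "CREATE TABLE warehouse_customer_totals (customer TEXT PRIMARY KEY, total REAL)"
    )
    conn.execute(
        "INSERT INTO warehouse_customer_totals SELECT customer, total FROM staging_totals"
    )
    conn.commit()
    result = conn.execute(
        "SELECT customer, total FROM warehouse_customer_totals ORDER BY customer"
    ).fetchall()
    conn.close()
    return result


totals = run_etl(SOURCE_ROWS)
print(totals)
```

In an ELT variant, the raw rows would be loaded into the warehouse first and the transformation query would run there instead of in staging.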

3. Remote Procedure Invocation (RPC) calls

In Remote Procedure Invocation (RPC) calls, source systems expose their functionality through a well-defined interface, and target applications consume or invoke these functions remotely using a technology such as Common Object Request Broker Architecture (CORBA). RPC calls were prevalent in the early 1990s and were primarily used to integrate systems running on different operating systems, programming languages, and hardware. However, they are rarely used for new systems today.

4. P2P Integration:

P2P integration involves direct connections between systems, making it suitable when the number of integrations is limited and when real-time integration is required. Here the source system exposes functionality and the target system consumes or invokes it. However, when more integrations have to be developed or many systems have to be integrated, this approach becomes very complex and the number of connections grows rapidly: fully connecting n systems needs up to n(n-1)/2 links. This approach is generally used when:

- Integrations are few, unified visibility is not needed, and quality of service is manageable

- Real-time integration is required
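To see why point-to-point integration gets hard to manage as systems are added, it helps to count the direct links in the worst case, where every system talks to every other one. The helper below is a hypothetical illustration of that arithmetic only.

```python
def point_to_point_links(systems: int) -> int:
    """Links needed when every system connects directly to every other one."""
    return systems * (systems - 1) // 2


# Each added system needs a link to every system that already exists.
for n in (3, 10, 50):
    print(f"{n} systems -> {point_to_point_links(n)} direct links")
```

Going from 10 to 50 systems multiplies the number of systems by 5 but the number of possible links by roughly 27.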

5. Shared Database:

Many enterprise software packages rely on a shared database approach for integration. This approach enables applications to access and update data from multiple sources through a unified database schema. It is particularly useful for maintaining data consistency across related applications. One of the most challenging tasks is developing a unified schema that meets the needs of multiple applications. This approach is used when:

- Data used by related applications has to be updated from different sources

- Transaction management systems, especially packaged software, need the data to be consistent at all times

6. Enterprise Application Integration (EAI)

The evolution of Enterprise Application Integration (EAI) has been significantly influenced by the emergence of Message Oriented Middleware (MoM) and the adoption of Service-Oriented Architecture (SOA) based on Web Services. These advancements have led the way for a new era of application integration, characterized by decoupling of applications, efficient service communication, and support for both synchronous and asynchronous interactions. This evolution has introduced a suite of tools and techniques for integration, including reliable message queues, message buses, message brokers with a central integration engine, service-oriented architecture, business process management, workflow and so on.

Message Queueing: Message Queueing plays a pivotal role in EAI by providing a temporary storage mechanism between senders and receivers. Messages can be persisted, offering durability and reliability. EAI leverages different processing modes:

- Asynchronous Processing: Senders publish messages to a queue and proceed with other tasks while the receiver processes the request at its own pace.

- Synchronous Processing: Senders publish a message and await a response from the receiver. This mode employs request-response parameters to achieve synchronous interactions.

- Pub-Sub Processing: In this mode, senders publish messages to an exchange, and the exchange routes these messages to one or more queues. Receivers subscribe to these queues and consume messages. This approach enables handling scenarios like multiple subscribers for the same message and message sequencing.
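The pub-sub mode above can be illustrated with a small in-memory sketch. It is not a real message broker: the `Exchange` class, the topic name and the subscriber names are hypothetical, and Python's standard `queue.Queue` stands in for a durable message queue. The point is only to show the publisher handing messages to an exchange, which copies them to every bound queue in order.

```python
import queue
from collections import defaultdict


class Exchange:
    """Toy in-memory exchange: routes each published message to every bound queue."""

    def __init__(self):
        self._bindings = defaultdict(list)  # topic -> list of subscriber queues

    def bind(self, topic: str) -> queue.Queue:
        """Create a queue for a new subscriber and bind it to the topic."""
        q = queue.Queue()
        self._bindings[topic].append(q)
        return q

    def publish(self, topic: str, message: dict) -> int:
        """Copy the message to each bound queue and return how many received it."""
        # The sender returns immediately; receivers drain their queues later.
        for q in self._bindings[topic]:
            q.put(message)
        return len(self._bindings[topic])


def drain(q: queue.Queue) -> list:
    """Consume everything currently waiting in a queue, in arrival order."""
    items = []
    while not q.empty():
        items.append(q.get())
    return items


exchange = Exchange()
billing = exchange.bind("order.created")   # hypothetical subscriber
shipping = exchange.bind("order.created")  # hypothetical subscriber

exchange.publish("order.created", {"order_id": 1})
exchange.publish("order.created", {"order_id": 2})

billing_msgs = drain(billing)
shipping_msgs = drain(shipping)
print(billing_msgs, shipping_msgs)
```

Both subscribers see the same two messages in the order they were published, and neither has to be running when the sender publishes.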

Message Broker: Message Brokers, often associated with Hub and Spoke architecture, address the challenges of point-to-point (P2P) integration. By connecting all applications through a central Broker (the Hub) and using adapters or connectors (the Spokes) for each system, Message Brokers facilitate loose coupling of applications. However, it’s crucial to note that a centralized Message Broker can be a single point of failure and a potential bottleneck, especially under heavy loads. Proper sizing and deployment are essential to mitigate these issues. Scaling vertically can address some limitations, but it may not be sufficient for handling extensive scalability requirements.

An alternative approach involves using Message Brokers as Message Buses to enable horizontal scaling. In this scenario, each application requiring integration incorporates a lightweight adaptor that acts as a small-footprint integration engine. While this approach mitigates some of the issues associated with centralized brokers, it can introduce complexities and increase operational and maintenance overheads.
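As a rough illustration of the hub-and-spoke broker described above, the sketch below has each system register an adapter with a central hub, so a sender only needs to know the hub and a message type. The `Broker` class, the adapter functions, the message type and the output formats are all hypothetical, chosen only to show the routing idea.

```python
from typing import Callable, Dict, List


class Broker:
    """Toy hub: each spoke registers an adapter; the hub routes by message type."""

    def __init__(self):
        self._adapters: Dict[str, Callable[[dict], str]] = {}
        self._routes: Dict[str, List[str]] = {}

    def register(self, system: str, adapter: Callable[[dict], str]) -> None:
        self._adapters[system] = adapter

    def route(self, message_type: str, targets: List[str]) -> None:
        self._routes[message_type] = targets

    def send(self, message_type: str, payload: dict) -> Dict[str, str]:
        # The sender only knows the hub; the hub decides who receives the message.
        return {
            target: self._adapters[target](payload)
            for target in self._routes.get(message_type, [])
        }


# Hypothetical adapters translating a common payload to each system's format.
def crm_adapter(payload: dict) -> str:
    return f"CRM|{payload['customer']}|{payload['email']}"


def erp_adapter(payload: dict) -> str:
    return f"<customer name='{payload['customer']}'/>"


broker = Broker()
broker.register("crm", crm_adapter)
broker.register("erp", erp_adapter)
broker.route("customer.updated", ["crm", "erp"])

delivered = broker.send(
    "customer.updated", {"customer": "Acme", "email": "ops@acme.example"}
)
print(delivered)
```

Every message passes through the single `Broker` instance, which is also why a centralized hub can become the bottleneck and single point of failure noted above.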

SOA with Enterprise Service Bus (ESB): SOA, coupled with an Enterprise Service Bus (ESB), serves as the backbone for integration in EAI. The ESB facilitates heterogeneous system integration by adhering to open Web Services standards. SOA encourages the development of business functions as services, which can then be integrated using the ESB. With SOA as the architectural pattern and the ESB as the platform, this combination offers numerous capabilities, including service reusability, service virtualization, protocol mediation, connectivity with applications through various connectors, message mediation, throttling, caching, security and more.
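Message mediation, one of the capabilities listed above, often means converting a message from the format one system produces into the format another expects. The sketch below shows the idea for a flat JSON object converted to XML using only the Python standard library. The `json_to_xml` function and the `order` root tag are hypothetical, and a real ESB would also handle nested structures, namespaces and schemas.

```python
import json
import xml.etree.ElementTree as ET


def json_to_xml(json_text: str, root_tag: str = "order") -> str:
    """Convert a flat JSON object into a simple XML document (illustrative only)."""
    data = json.loads(json_text)
    root = ET.Element(root_tag)
    for key, value in data.items():
        # Each JSON field becomes one child element holding the value as text.
        child = ET.SubElement(root, key)
        child.text = str(value)
    return ET.tostring(root, encoding="unicode")


xml_message = json_to_xml('{"id": 42, "status": "shipped"}')
print(xml_message)
```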

EDA with Streaming: Event-Driven Architecture (EDA) and streaming are modern approaches to designing and building software systems, and they complement SOA with ESB.

EDA focuses on events as the primary means of communication between components. Events are generated, detected, consumed, and reacted to as significant occurrences.

Streaming involves the continuous processing of data records as they arrive, enabling real-time insights and reactions.

This approach is particularly suitable for use cases where events, such as those from IoT/IIoT sensors or social media, are generated continuously and rapidly. EDA with streaming allows for loose coupling between components, making systems more flexible and scalable.
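The two ideas above can be combined in a small sketch: a generator processes sensor readings one at a time as they arrive, and an event is emitted whenever a rolling average crosses a threshold. The `EventBus` class, the event name, the threshold and the sample readings are all hypothetical. A production system would typically use a streaming platform rather than in-process Python objects.

```python
from collections import defaultdict, deque
from typing import Iterable, Iterator


class EventBus:
    """Toy event bus: producers emit events, subscribed handlers react to them."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def subscribe(self, event_type, handler):
        self._handlers[event_type].append(handler)

    def emit(self, event_type, payload):
        for handler in self._handlers[event_type]:
            handler(payload)


def rolling_average(readings: Iterable[float], window: int) -> Iterator[float]:
    """Yield the average of the last `window` readings as each reading arrives."""
    recent = deque(maxlen=window)
    for value in readings:
        recent.append(value)
        yield sum(recent) / len(recent)


alerts = []
bus = EventBus()
bus.subscribe("temperature.high", alerts.append)  # consumer only knows the event

sensor_stream = [20.0, 22.0, 30.0, 34.0]  # hypothetical sensor readings
averages = []
for avg in rolling_average(sensor_stream, window=2):
    averages.append(avg)
    if avg > 25.0:  # hypothetical threshold
        bus.emit("temperature.high", {"average": avg})

print(averages, alerts)
```

The producer loop never calls the alert handler directly, which is the loose coupling that EDA aims for.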

BPM and Workflow Engine: Business Process Management (BPM) and Workflow Engines play vital roles in achieving operational efficiency. These tools organize multiple functions, services, and activities into structured business processes to attain specific business outcomes. While BPM focuses on sequencing multiple functions as a cohesive business process, Workflow Engines manage and guide users through discrete steps within functions and activities. BPM and Workflow bring a level of control and automation to business operations, enhancing efficiency and streamlining workflows.

7. Microservices Architecture & API First Approach with API Management Platform:

In modern software architecture, the API First/Microservices approach has gained immense popularity. This approach involves the granularization of business functionalities into distinct services within a Microservices architecture. These services, when combined, collectively provide the full spectrum of business functionalities, resulting in a complete software application. However, the key differentiator lies in how these services are exposed and orchestrated using APIs.

Microservices and Exposing APIs: Within a Microservices architecture, business functions are divided into discrete services. These services can encompass everything from user authentication and data processing to payment gateways and content delivery. Each service operates independently, has its own data store, and communicates with others through well-defined APIs. These APIs are exposed with different protocols and adhere to standardized definitions, fostering interoperability and ensuring efficient communication across services.

Integration Challenges: However, merely developing APIs is insufficient to fully address the integration needs that arise when disparate systems and technologies must collaborate. To realize the potential of Microservices and APIs, a comprehensive approach to integration is required.

The Role of API Management Platform: This is where the API Management Platform comes into play. It serves as a central hub for managing, securing, and orchestrating APIs, ensuring that they function seamlessly in complex ecosystems. The API Management Platform offers two primary capabilities: API Management and Enterprise Service Bus.

- API Management: API Management involves the governance and control of APIs throughout their lifecycle. This encompasses API design, development, testing, deployment, versioning, and retirement. Key features include: API Development, Developer Experience, API Security, API Management Gateways, API Orchestration/Workflow Definitions and more.

- Enterprise Service Bus (ESB) Capabilities: In addition to API Management, the API Management Platform incorporates Enterprise Service Bus (ESB) capabilities. ESB serves as a powerful integration tool, allowing for the seamless flow of data and interactions between disparate systems.

API INTEGRATION IS FUNDAMENTAL FOR BUILDING WEB & MOBILE APPLICATIONS:

By keeping the following architectural, deployment, and design considerations in mind, one can build a robust and successful SaaS application that aligns with the vision and the needs of users and clients.

An “API First” approach is a development methodology in which the APIs of a software system are designed and built before the user interface or any other part of the application. This approach prioritizes the creation of a well-defined and robust API as the foundation of the software, allowing for easier integration with other systems, improved collaboration among development teams, and flexibility in adapting to changing requirements.

API integration is the process of connecting to and using external APIs within an application to leverage their functionality, data, or services. In today’s fast-paced digital landscape, API integration is a fundamental part of modern software development, especially when building web and mobile applications.

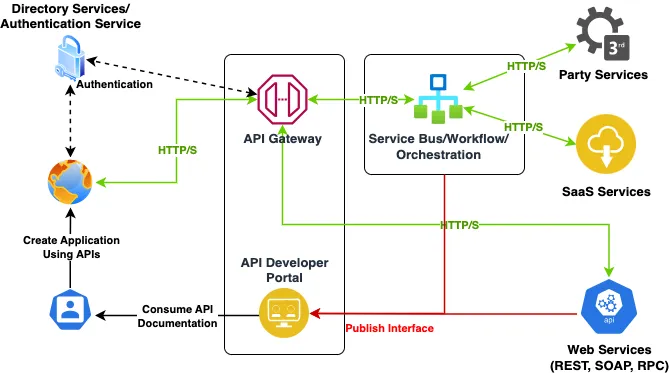

The diagram above provides a high-level reference architecture for using APIs in integration.

API management platforms provide a set of tools and services to help organizations create, publish, secure, monitor, and analyze their APIs. These platforms play a crucial role in ensuring the effective and efficient operation of APIs. The following are the key components of API management platforms.

API Gateway: API Gateway handles requests, routing, security, request throttling/limiting and more.

API Developer Portal: This provides API documentation (including endpoints, request/response formats, usage details, sample code and so on), API key management (requesting tokens/keys through the portal), and a test/sandbox environment.

API Security/Access Control: Provides authentication and authorization support.

API Life Cycle: Support for API Versioning, Change Management, Deployment & Version Control, Onboarding and more

API Integration: Integrates with various backend services and data sources, including microservices, databases, and legacy systems, and converts between different data formats and protocols, such as REST to SOAP or RPC. It also supports API orchestration and workflows.

Analytics and Monitoring: Collect API usage data (traffic, response time, errors & more), Alerts and Notifications, Logging and more

Compliance & Governance: Enforce API Policies for standards and regulations, Audit trails and so on.
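Of the components above, request throttling in the API Gateway is one that is easy to show in code. The sketch below uses a token bucket, which is one common throttling technique and not necessarily what any given API management product uses. The `TokenBucket` class, the `handle_request` function and the limits are hypothetical, and the clock is passed in explicitly so the behaviour is easy to follow.

```python
class TokenBucket:
    """Token-bucket limiter: each request spends a token; tokens refill over time."""

    def __init__(self, capacity: int, refill_per_second: float):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.tokens = float(capacity)  # start with a full bucket
        self.last_refill = 0.0

    def allow(self, now: float) -> bool:
        # Add the tokens earned since the last call, capped at the bucket capacity.
        elapsed = max(0.0, now - self.last_refill)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.last_refill = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


def handle_request(bucket: TokenBucket, now: float) -> int:
    """Return an HTTP-style status: 200 if allowed, 429 if throttled."""
    return 200 if bucket.allow(now) else 429


bucket = TokenBucket(capacity=2, refill_per_second=1.0)
# Three requests at t=0 seconds, then one more at t=1 second.
statuses = [handle_request(bucket, t) for t in (0.0, 0.0, 0.0, 1.0)]
print(statuses)
```

A gateway would typically keep one bucket per API key or client, so that one heavy consumer cannot exhaust the quota of others.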

API TYPES:

Understanding these API types helps organizations make informed decisions about API design, access control, and the level of security required based on the specific use case and audience they are targeting.

Open/Public APIs: These are publicly available APIs with minimal restrictions, though they may require registration. Security is implemented using API keys or OAuth, or the API is sometimes left completely open. Open/public APIs are primarily designed for external users to access data or services provided by an organization. They are commonly used in Business-to-Consumer (B2C) scenarios where applications or services are offered directly to consumers.

Internal/Private APIs: These are exposed to internal systems across different development teams and are hidden from external users. External users who want to use them must become partners and consume them as third-party APIs. Security is implemented using tokens, API keys, or internal IAM systems. Internal/private APIs are used for enhancing productivity, promoting data and service reuse, and facilitating communication between different internal systems. They are commonly used in Application-to-Application (A2A), Business-to-Business (B2B), and some B2C scenarios.

Partner APIs: These are developed for strategic business partners and are not available publicly. They require an onboarding process, validation, workflow and so on to either publish or consume an API through the portal. Partner APIs are mainly used in B2B and some B2C scenarios where organizations collaborate closely with specific partners to share data or services. They are often used to establish deeper integrations and mutually beneficial relationships with partners.

Composite/Aggregated APIs: In this case, multiple data sources or services are combined or orchestrated to provide unified functionality. These are built on API platforms using their orchestration/workflow capabilities. Composite/aggregated APIs are used to simplify complex operations for developers and reduce the number of API calls required to achieve a specific task. They provide a more streamlined and efficient way to access multiple services or data sources.
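A composite API can be pictured as one function that calls several underlying services and returns a single combined response. In the sketch below, `get_customer` and `get_orders` are hypothetical stand-ins for two separate backend APIs, with hard-coded data in place of real network calls, and `get_customer_summary` plays the role of the composite API.

```python
def get_customer(customer_id: str) -> dict:
    """Hypothetical stand-in for a customer service API call."""
    return {"id": customer_id, "name": "Acme Corp"}


def get_orders(customer_id: str) -> list:
    """Hypothetical stand-in for an order service API call."""
    return [{"order_id": 1, "total": 120.5}, {"order_id": 2, "total": 80.0}]


def get_customer_summary(customer_id: str) -> dict:
    """Composite API: one call for the client, two calls behind the scenes."""
    customer = get_customer(customer_id)
    orders = get_orders(customer_id)
    return {
        "customer": customer["name"],
        "order_count": len(orders),
        "lifetime_value": sum(order["total"] for order in orders),
    }


summary = get_customer_summary("c-1")
print(summary)
```

The client makes one request instead of two and does not need to know how the underlying services are split.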

CONCLUSION:

By combining API Management with ESB capabilities within an API Management Platform, organizations can unlock the full potential of their Microservices architecture. This approach not only empowers businesses to create scalable, agile, and interoperable software solutions but also ensures that these solutions integrate seamlessly with existing systems and technologies, fostering innovation and adaptability in a rapidly evolving digital landscape.

For more insights on API Management Platform capabilities, you can refer to another article of mine: https://dzone.com/articles/point-of-view-on-api-platforms